Hello everyone,

I am currently investigating how to operate a stake pool safely, with as few security vulnerabilities as possible.

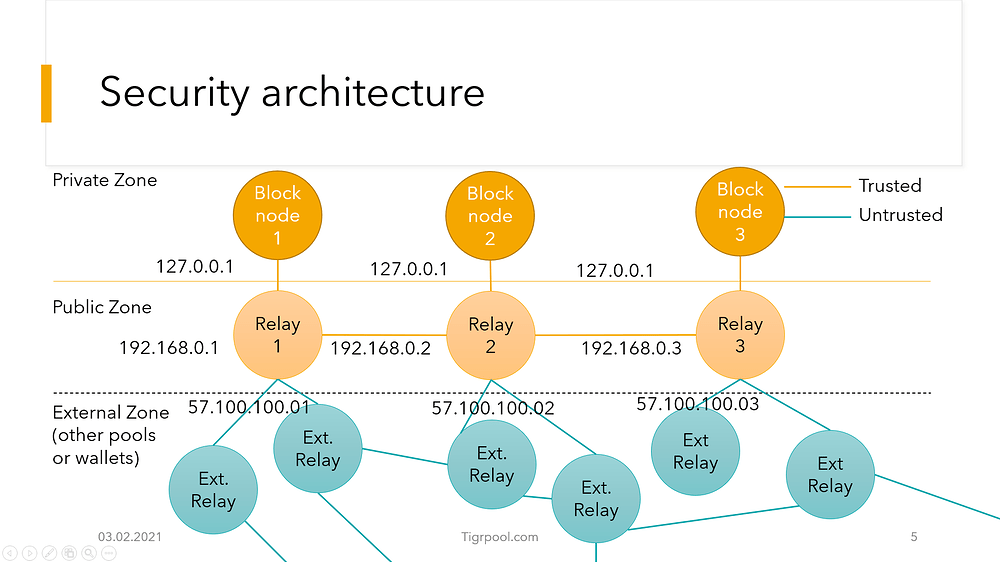

One setup sounds very promising to me because it completely isolates the block-producing node from any external interface, which is the main reason I like this approach most. As I am not yet an expert on the Cardano node, I would like to ask you to review it and share any concerns or improvements.

General Assumptions:

- The producing node and the relay node are both managed via Docker, following best practices (e.g. running containers as a non-root user, keeping images as small as possible, …).

- iptables is set up to limit DDoS exposure and to drop invalid packets and spoofing attacks.

- The producing node binds to 127.0.0.1:3000 (only accessible from the same host).

- The relay node binds to 0.0.0.0:3001 (accessible from the internet).

- The producing node and the relay node run on the same machine but are isolated with Docker.
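Because the whole design relies on the producer being reachable only via 127.0.0.1, a quick reachability check after every Docker or iptables change can confirm the bind still behaves as intended. This is a minimal sketch; `is_port_open` and `check_producer_exposure` are hypothetical helpers. It assumes the relay and producer containers share the host's network namespace (e.g. Docker host networking): with Docker's default bridge network each container has its own 127.0.0.1, so the relay could not reach the producer at 127.0.0.1:3000 at all.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


def check_producer_exposure(public_ip: str, port: int = 3000) -> dict:
    """Hypothetical smoke test: the producer port should answer on loopback only."""
    return {
        "loopback": is_port_open("127.0.0.1", port),
        "public": is_port_open(public_ip, port),
    }
```

If the bind works as intended, `check_producer_exposure("<public IP of the host>")` should report `loopback` as `True` and `public` as `False`. The public check is most meaningful when run from a second machine: on Linux, traffic from a host to its own public IP is routed over the loopback interface and never crosses the external interface or the firewall rules attached to it.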

The relay topology JSON file would look like this:

```json
{
  "Producers": [
    {
      "addr": "127.0.0.1",
      "port": 3000,
      "valency": 1
    },
    {
      "addr": "192.168.0.1",
      "port": 3001,
      "valency": 1
    },
    {
      "addr": "192.168.0.2",
      "port": 3001,
      "valency": 1
    },
    {
      "addr": "192.168.0.3",
      "port": 3001,
      "valency": 1
    }
  ]
}
```
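Hand-editing topology files is error-prone: forum posts and word processors often turn straight quotes into typographic ones, which a JSON parser rejects. One option is to generate the file from a list of peers. This sketch assumes the legacy topology format with a `Producers` list, as used in this post; `build_topology` is a hypothetical helper and the peer list simply mirrors the relay topology above.

```python
from __future__ import annotations

import json


def build_topology(peers: list[tuple[str, int]], valency: int = 1) -> str:
    """Build a legacy cardano-node topology ("Producers" list) as JSON text."""
    producers = [
        {"addr": addr, "port": port, "valency": valency} for addr, port in peers
    ]
    # json.dumps always emits plain ASCII double quotes, so the result
    # cannot contain typographic quotes that would break parsing.
    return json.dumps({"Producers": producers}, indent=2)


relay_peers = [
    ("127.0.0.1", 3000),    # local block producer
    ("192.168.0.1", 3001),  # relay 1
    ("192.168.0.2", 3001),  # relay 2
    ("192.168.0.3", 3001),  # relay 3
]

relay_topology = build_topology(relay_peers)
print(relay_topology)
```

Writing `relay_topology` to the relay's topology file keeps the structure consistent whenever a relay is added or removed.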

The producer topology would look like this:

```json
{
  "Producers": [
    {
      "addr": "127.0.0.1",
      "port": 3001,
      "valency": 1
    }
  ]
}
```
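Since the producer is meant to talk only to the local relay, a small pre-deployment check can flag any peer in its topology that is not a loopback address. This is a sketch under the same legacy `Producers` format; `non_loopback_peers` is a hypothetical helper, and hostnames are conservatively treated as non-loopback because they are not resolved here.

```python
from __future__ import annotations

import ipaddress
import json


def non_loopback_peers(topology_json: str) -> list[str]:
    """Return "addr:port" for every peer that is not a loopback IP literal."""
    topology = json.loads(topology_json)
    offenders = []
    for peer in topology.get("Producers", []):
        addr = peer["addr"]
        try:
            if ipaddress.ip_address(addr).is_loopback:
                continue
        except ValueError:
            # Not an IP literal (e.g. a hostname): flag it for manual review.
            pass
        offenders.append(f'{addr}:{peer["port"]}')
    return offenders


producer_topology = """
{
  "Producers": [
    {"addr": "127.0.0.1", "port": 3001, "valency": 1}
  ]
}
"""

print(non_loopback_peers(producer_topology))
```

An empty list means the producer topology only points at loopback peers; anything else suggests the producer would open outbound connections beyond the local relay.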

To register the pool, I would like to use:

```bash
# --single-host-pool-relay takes a DNS name backed by A/AAAA records;
# --pool-relay-ipv4 only accepts a literal IPv4 address.
cardano-cli shelley stake-pool registration-certificate \
  --cold-verification-key-file cold.vkey \
  --vrf-verification-key-file vrf.vkey \
  --pool-pledge \
  --pool-cost \
  --pool-margin \
  --pool-reward-account-verification-key-file stake.vkey \
  --pool-owner-stake-verification-key-file stake.vkey \
  --mainnet \
  --single-host-pool-relay relay.tigrpool.com \
  --pool-relay-port 3001 \
  --metadata-url … \
  --metadata-hash \
  --out-file …
```

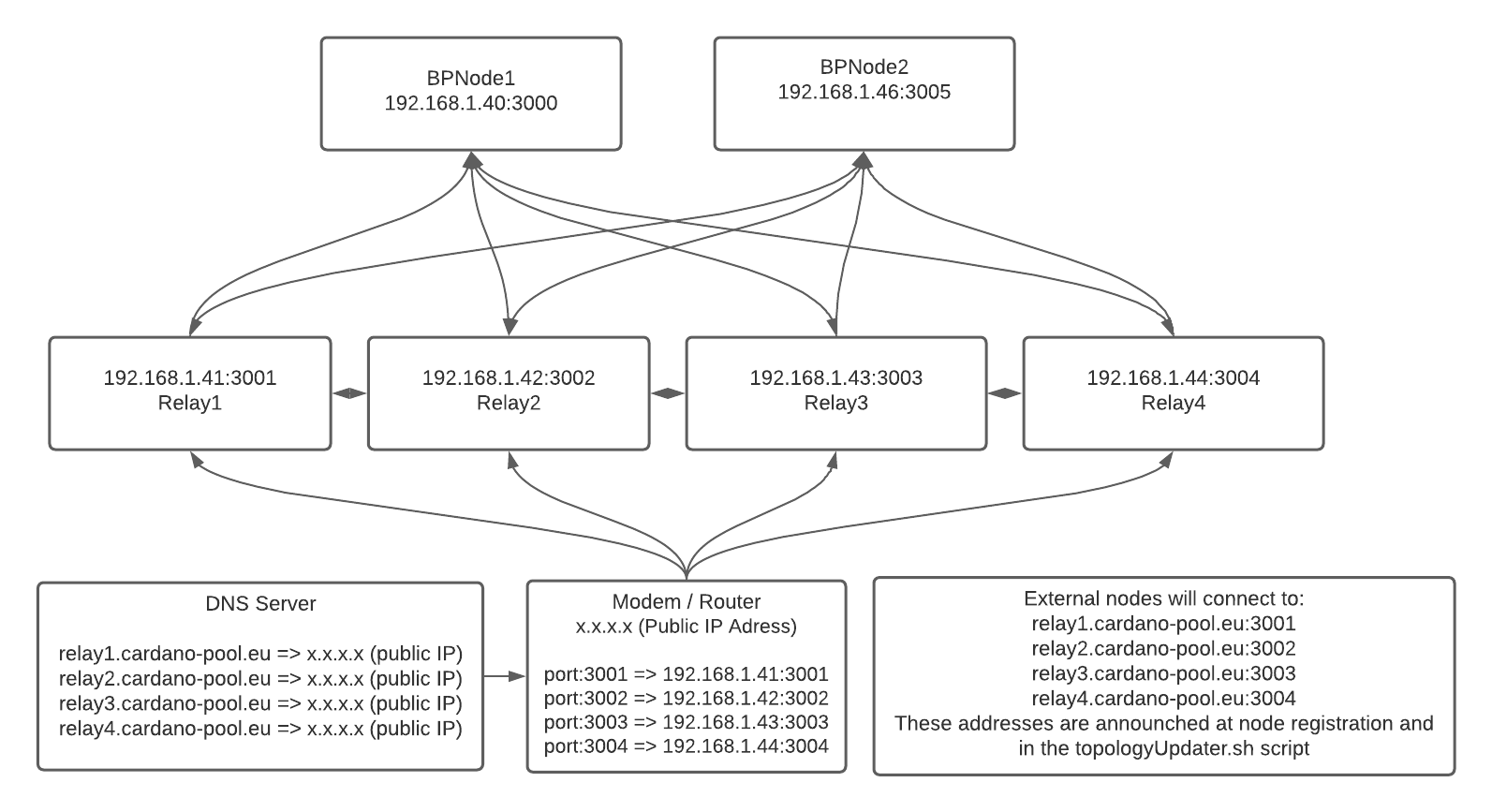

For relay.tigrpool.com I would then add three DNS A records:

- 57.100.100.1
- 57.100.100.2
- 57.100.100.3
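Once the three A records are in place, it is worth confirming that the relay name actually resolves to all of them before submitting the certificate. This sketch uses the system resolver via `socket.getaddrinfo`; `resolve_ipv4` and `missing_relays` are hypothetical helpers, and the resolver is injectable so the comparison logic can be checked without network access.

```python
from __future__ import annotations

import socket
from typing import Callable, Iterable


def resolve_ipv4(hostname: str) -> set[str]:
    """Resolve all IPv4 (A record) addresses for hostname via the system resolver."""
    infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET,
                               type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return {info[4][0] for info in infos}


def missing_relays(hostname: str, expected: Iterable[str],
                   resolver: Callable[[str], set[str]] = resolve_ipv4) -> set[str]:
    """Return the expected relay IPs that hostname does not currently resolve to."""
    return set(expected) - resolver(hostname)


EXPECTED_RELAYS = {"57.100.100.1", "57.100.100.2", "57.100.100.3"}
```

For example, `missing_relays("relay.tigrpool.com", EXPECTED_RELAYS)` should return an empty set once all three records have propagated; a local resolver cache may still return an older answer for a while.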

Why do I prefer this setup?

Connecting the block-producing node to multiple relays would be possible, but it would mean exposing the block node's port to the open internet, which I don't think is a good idea. Restricting access to a whitelist of nodes via the firewall is an option, but if the firewall fails or is misconfigured (unlikely, but possible, even with Docker!), the block node would be open to the internet in the worst case.

Why not connect the block node and the relays within the 192.168.0.0/16 subnet?

It's a possibility, but I don't see a benefit yet. Since Relays 1 to 3 are connected to each other, they should do all the heavy lifting for traffic, while the block node only talks to one relay via 127.0.0.1.

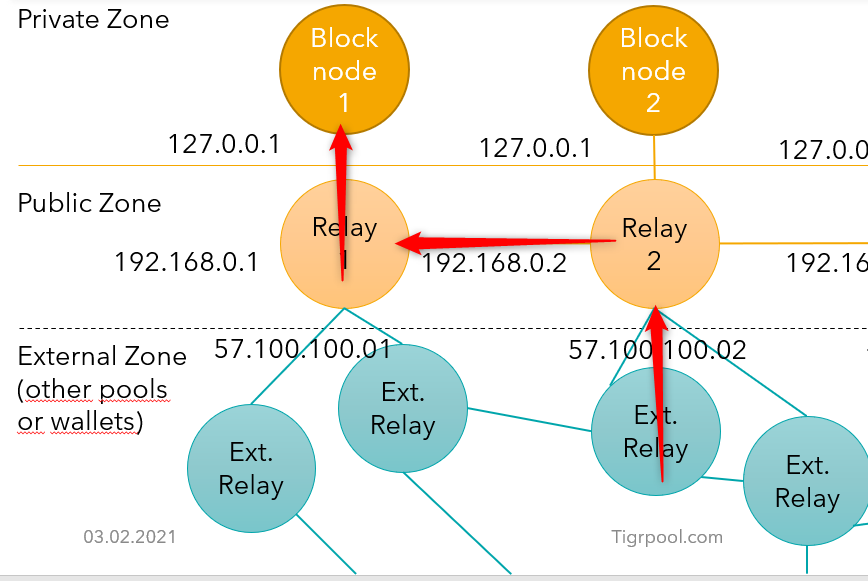

If Relay 1 is down, the block node won't be able to operate. Why not use two or more relays?

That's right, but it depends on why the relay node is down.

If the relay crashes, Docker restarts it automatically (assuming the container has a restart policy such as `always` or `unless-stopped`).

If the relay node is under a DDoS attack, its external interface is blocked and no external or internal traffic will leave the server. (The exception would be connecting the block node to other relay nodes via an external IP, as discussed above. But is availability more important than security? I don't think so.)

If the machine's drive fails (for whatever reason), it is also impossible to keep operating the node, even if multiple relays exist.
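The reasoning in these three cases can be made concrete with a toy availability model. The sketch assumes that the host and each relay process fail independently and that every relay runs on the same machine as the producer; `HOST_UP` and `RELAY_UP` are made-up illustrative values, not measurements, and `producer_availability` is a hypothetical helper.

```python
def producer_availability(host: float, relay: float, relays_on_host: int) -> float:
    """Probability the producer can reach at least one relay.

    Simplified model (an assumption, not a measurement): the host and each
    relay process fail independently, and all relays share the producer's
    host, so a host failure takes every relay down at once.
    """
    at_least_one_relay_up = 1 - (1 - relay) ** relays_on_host
    return host * at_least_one_relay_up


# Purely illustrative probabilities, not measured values.
HOST_UP = 0.999
RELAY_UP = 0.99

for n in (1, 2, 3):
    print(n, round(producer_availability(HOST_UP, RELAY_UP, n), 6))
```

Under these assumptions, adding relays on the same host only pushes availability towards `HOST_UP` and never above it, which matches the point that a failed drive stops the node regardless of the number of relays. The model ignores correlated failures such as a DDoS saturating the shared uplink, which would affect all co-located relays at once.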

Now my questions:

- First of all, do you consider this setup good in terms of security?

- Are there any weaknesses I might have missed?

- See the post below with a picture.