Hello new Pool Operators!

Today I want to share a possible way to fix this issue: the relay node setup is complete, but Processed TX stays at 0 (no transactions are processed).

If you are new, you should know that after the node is started for the first time, it needs to sync with the network. Once the sync has completed, you need to announce your relays to the network, and this is done with the topologyupdater script. (The test network has to get along without the P2P network module for the time being, so it needs static topology files. The “TopologyUpdater” service, which is far from perfect because of its centralization, is intended as a temporary solution that lets everyone activate their relay nodes without having to wait for manual topology completion requests.)

!!! The topologyupdater shell script must be executed on the relay node as a cronjob exactly every 60 minutes. After 4 consecutive requests (3 hours) the node is considered a new relay node and is listed in the topology file. If the node is turned off, it is automatically delisted after 3 hours !!!
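One way to get a run exactly every 60 minutes is a crontab line with a fixed minute. This is just a sketch: the script path, the CNODE_HOME default and the minute 33 are my assumptions, so use the location where your script really lives.

```shell
# Hypothetical install location (assumption); adjust to your own setup.
SCRIPT="${CNODE_HOME:-/opt/cardano/cnode}/scripts/topologyUpdater.sh"

# Print a crontab line that runs a script once per hour at a fixed minute.
cron_entry() {
  printf '%s * * * * %s\n' "$1" "$2"
}

# Show the line to paste into the crontab of the user running the relay.
cron_entry 33 "$SCRIPT"
```

Paste the printed line into `crontab -e`. Because the minute is fixed, consecutive runs are always 60 minutes apart.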

(

Another important point: if you are using IPv6, you have to change the node arguments.

You also need to edit your topologyupdater script.

If you run your node in an IPv4/IPv6 dual-stack network configuration and want to announce the IPv4 address only, add the -4 parameter to the curl command in the script (curl -4 -s …)

E.g.:

curl -4 -s "https://api.clio.one/htopology/v1/?port=$…

)

If you look in the logs/topologyupdater.log file, you will see:

- the first 3 requests (after 2 hours) will generate:

{ "resultcode": "x", "datetime":"2020-12-08 11:13:02", "clientIp": "your_node_ip", "iptype": 4, "msg": "nice to meet you …" }

- the 4th consecutive request (after 3 hours) will generate:

{ "resultcode": "204", "datetime":"2020-12-08 11:13:02", "clientIp": "your_node_ip", "iptype": 4, "msg": "glad you're staying with us" }
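If you don't want to read the whole log every time, a small helper can print just the result code of the most recent request. This is a minimal sketch, assuming the log holds one JSON object per line with plain straight quotes; the function name is my own.

```shell
# Print the "resultcode" value found on the last line of a topologyupdater log.
last_resultcode() {
  tail -n 1 "$1" |
    sed -n 's/.*"resultcode"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}
```

For example, `last_resultcode logs/topologyupdater.log` should print 204 once the relay has been accepted.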

Each time the script runs, your topology.json file is overwritten with new relays (REMEMBER to set your BP IP in the topologyupdater script → CUSTOM_PEERS). ALSO, the topologyupdater script must run only on relay nodes!

!!! If your CNODE_PORT is blocked by the firewall for incoming connections, you will receive the following message in the topologyupdater.log file:

{ "resultcode": "403", "datetime":"2020-12-06 08:11:03", "clientIp": "x.x.x.x", "iptype": 4, "msg": "glad you want to stay with us, but please check and enable your IP:port reachability" }

At this point you need to check the firewall (sudo ufw status) to see whether the CNODE_PORT set in the env file is open and permits incoming connections. (Port forwarding sometimes also needs to be configured if private IPs are used.)
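The firewall check can be sketched as a small filter over the output of `ufw status`. I am assuming the usual table layout where the rule line starts with the port (for example 6000/tcp) followed by ALLOW; the function name and port 6000 are only examples.

```shell
# Succeed if the `ufw status` output on stdin has an ALLOW rule for the port.
port_allowed() {
  grep -Eq "^$1(/tcp)?[[:space:]]+ALLOW"
}
```

You could then run `sudo ufw status | port_allowed "$CNODE_PORT" || sudo ufw allow "$CNODE_PORT/tcp"`. Keep in mind this only looks at the local firewall; a router or cloud firewall in front of the node can still block the port.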

You can check from outside here: https://www.portcheckers.com/ (or on any other site you prefer).

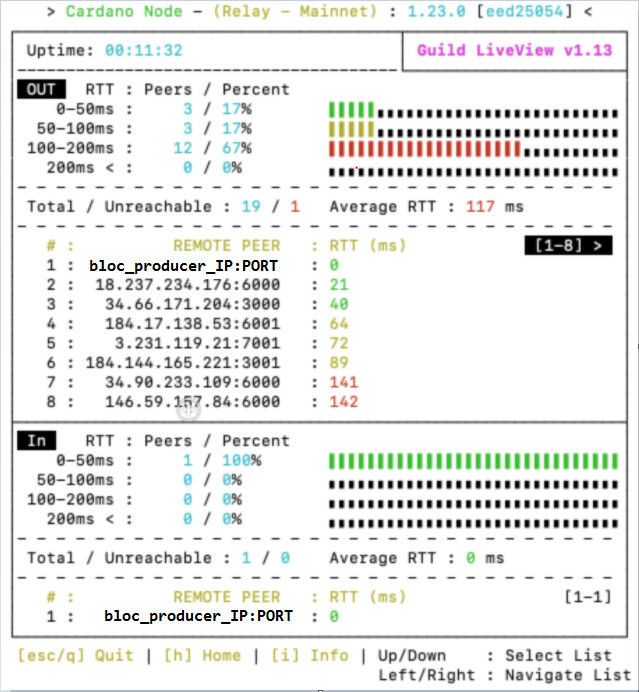

Now, if all looks good (topologyupdater.log and topology.json files), you can run ./gliveview.sh to see your node's statistics, which should look like this:

As you can see, you get information about the type of node (BP or Relay), the epoch status, uptime, peers and, most important I think, Processed TX. This should not be 0; it should increase!!!

If Processed TX is increasing, your node is communicating fine with the nodes discovered and written to the topology.json file.

BUT if Processed TX is NOT increasing, something is wrong and you need to investigate.

If gliveview looks like this, the relays from the topology file don't know you or can't communicate with your node:

As you can see, everything looks fine except [IN] PEERS (here only the BP is connected).

We have already established that in the topologyupdater.log file you are receiving the following message:

{ "resultcode": "204", "datetime":"2020-12-08 11:13:02", "clientIp": "your_node_ip", "iptype": 4, "msg": "glad you're staying with us" } (if not, check your topologyupdater script!)

At this point you need to find out why the nodes from the topology.json file are not able to connect to you.

One possible reason is that your node is not known to them… so you must check whether your node was added to the mainnet Cardano relays topology file here: https://explorer.mainnet.cardano.org/relays/topology.json
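Instead of scrolling through that file by hand, you can search it for your relay. This is a rough sketch, assuming the file lists each relay address (hostname or IP) as a quoted JSON string; the function name and the example address are hypothetical.

```shell
# Succeed if the topology JSON on stdin contains the address as a quoted string.
relay_listed() {
  grep -qF "\"$1\""
}
```

For example: `curl -s https://explorer.mainnet.cardano.org/relays/topology.json | relay_listed "relay.example.com" && echo listed`.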

If your node is not present, something went wrong when you registered; it could be that your metadata HASH is corrupted and doesn't match.

Using the following command, I was able to see that SMASH calculated the hash of the metadata, but it didn't match the hash stated in the registration certificate (obviously swap out my pool ID for your own):

curl https://smash.cardano-mainnet.iohk.io/api/v1/errors/00333b89543da962cc92e7ffa45848f42a98f7276780670728ce5256

if your hash is ok the output will be

if your hash is NOT ok the output will be something like:

[{"time":"08.12.2020. 13:13:42","retryCount":18,"poolHash":"ad198e1148b9cb1ccdbc935b00074e82eaf73a29e233095ad6bd25fdd7b2d7a0","cause":"Hash mismatch from poolId '00333b89543da962cc92e7ffa45848f42a98f7276780670728ce5256' when fetching metadata from 'https://url_where_you_uploaded/metadata.json'…

Incidentally, you can also check that SMASH can see your metadata with the following:

curl https://smash.cardano-mainnet.iohk.io/api/v1/metadata/[your-pool-id-hash]/[your-pool-meta-data-hash]

If there’s a match, it will echo back the contents of your metadata file. If you get nothing back, SMASH doesn’t know you.

Try uploading the metadata.json file again, and be careful not to change anything in it, because then the HASH will change too and will NOT match!
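To make sure the uploaded file is byte-for-byte the same as your local one, you can hash both copies and compare. A sketch under assumptions: it uses b2sum from GNU coreutils, and as far as I know the pool metadata hash is the BLAKE2b-256 digest of the file bytes. The stake-pool metadata-hash subcommand of cardano-cli (exact prefix depends on the CLI version) remains the authoritative way to compute it. The function names are my own.

```shell
# Print the BLAKE2b-256 digest (hex) of a file using GNU coreutils b2sum.
blake2b_256() {
  b2sum -l 256 "$1" | cut -d ' ' -f 1
}

# Succeed only if both files produce the same digest (identical bytes).
same_metadata() {
  [ "$(blake2b_256 "$1")" = "$(blake2b_256 "$2")" ]
}
```

For example, download the served copy with `curl -s -o /tmp/served.json https://url_where_you_uploaded/metadata.json` and then run `same_metadata metadata.json /tmp/served.json`. Even one extra newline or space makes the two digests differ.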

If the HASH is ok after you have uploaded the metadata again, you should see in gliveview:

This means you are now ready to create BLOCKS! GOOD LUCK!

I came across another case these days where the relay had IN / OUT PEERS but the TX count was not incrementing … this was fixed by editing the configuration.json file:

"TraceLocalTxSubmissionProtocol": false, → true

"TraceLocalTxSubmissionServer": false, → true
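If you prefer not to edit the file by hand, the same change can be sketched with sed. This assumes both keys are present in the file and currently set to false; the function name is hypothetical, and you pass in the path to your own configuration.json.

```shell
# Set both TraceLocalTxSubmission* flags to true in the given config file.
# A backup of the original is kept next to it with a .bak suffix.
enable_tx_submission_tracing() {
  sed -E -i.bak \
    's/("TraceLocalTxSubmission(Protocol|Server)"[[:space:]]*:[[:space:]]*)false/\1true/' \
    "$1"
}
```

Run it as `enable_tx_submission_tracing /path/to/configuration.json`, then restart the node so the new settings are picked up.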

For BP troubleshooting, check:

I hope this will be useful for the new Pool Operators.

####################################################

Thank you and if you want to support us please delegate with

Charity Pool ticker: CHRTY

Earn rewards by helping others! Support children's education!

Support decentralization!

####################################################